I very much enjoy testing web apps by simulating their network requests in code. This lets me visit websites, log in, and replicate functionality, all without a browser, which is a slightly different sort of testing than many testers are accustomed to. I love to explore what’s happening under the hood when we click elements and submit forms. I like to play with cookies and payloads, and I try to find the bare minimum of data I need to pass through HTTP requests to recreate a particular user behavior. People often do this kind of testing with Postman, but I’ve been accustomed to implementing tests with Ruby and the rest-client gem. Recently, though, I looked at how Cypress plays with network requests. I was especially curious about how they take it further with their built-in request stubbing feature, cy.route(), because I had never tried stubbing before.

First, some context on HTTP requests:

- GET requests often simulate visiting (or redirecting to) a web page or retrieving a resource (like an image or another file)

- POST requests often simulate a form submission, like logins or payments, and as such deal with passing entered data in order to proceed to the next application state

- There are other types of HTTP requests but mastering how these two work is enough at the start

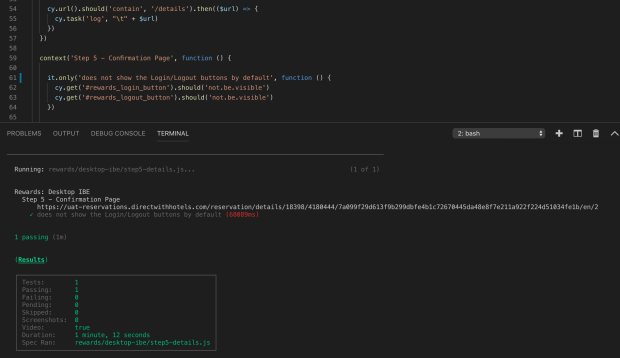

And here is an example of how Cypress helps you perform such a GET request:
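A minimal sketch of what such a call can look like. The URL is a placeholder, and the `typeof Cypress` guard is only there so the snippet stays harmless if it is loaded outside the Cypress runner.

```javascript
// Options passed to cy.request(); the URL is a hypothetical placeholder.
const getPageOptions = {
  method: 'GET',
  url: 'https://example.com/login',
};

// `Cypress`, `it`, `cy`, and `expect` are globals provided by the Cypress runner.
if (typeof Cypress !== 'undefined') {
  it('retrieves the login page without a browser visit', () => {
    cy.request(getPageOptions).then((response) => {
      // cy.request() yields the response, including its status code and body.
      expect(response.status).to.eq(200);
    });
  });
}
```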

And for a POST request:
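Again, only a sketch under assumptions: the `/payments` endpoint, the token, and the form fields are all made up for illustration.

```javascript
// Options passed to cy.request(); every value below is a hypothetical placeholder.
const submitPaymentOptions = {
  method: 'POST',
  url: 'https://example.com/payments',
  // Session-related information travels in the headers.
  headers: { Authorization: 'Bearer hypothetical-session-token' },
  // What the user would have typed into the form travels in the body.
  body: { amount: 100, currency: 'USD' },
};

// `Cypress`, `it`, `cy`, and `expect` are globals provided by the Cypress runner.
if (typeof Cypress !== 'undefined') {
  it('submits the form data directly over HTTP', () => {
    cy.request(submitPaymentOptions).then((response) => {
      // Assumes the endpoint answers a successful submission with a 2xx status.
      expect(response.status).to.be.within(200, 299);
    });
  });
}
```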

Pretty straightforward and easy to follow. Notice that POST requests have more information in them than GET requests, since we’re passing data – the body field is concerned with user inputs while the headers field is concerned with the user session, among other things. Of course, both requests need a url field, some place to send the request to.

And when we send a request, we receive a response. That response tells us how the web application behaved after the request: Was the user redirected to another page? Was the user able to log in? Did an expected web element get displayed or hidden? Were we sent to an error page, perhaps?
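One way to read those answers from a response is to look at its status code. The sketch below assumes a placeholder URL and uses a small helper, `describeOutcome`, which is my own invention and not part of Cypress.

```javascript
// Hypothetical helper: turns a status code into a rough description of what happened.
function describeOutcome(response) {
  if (response.status >= 200 && response.status < 300) return 'ok';
  if (response.status >= 300 && response.status < 400) return 'redirect';
  return 'error';
}

// `Cypress`, `it`, and `cy` are globals provided by the Cypress runner.
if (typeof Cypress !== 'undefined') {
  it('reports what happened after the request', () => {
    cy.request({
      method: 'GET',
      url: 'https://example.com/account', // placeholder URL
      followRedirect: false,   // keep a 3xx response instead of following it
      failOnStatusCode: false, // let 4xx/5xx responses through so we can inspect them
    }).then((response) => {
      cy.log(`Outcome: ${describeOutcome(response)}`);
    });
  });
}
```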

Cypress takes network requests further by introducing routing to testing. Here’s an example:
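A minimal sketch of such a routed test. The `/login` page path passed to `cy.visit()` is an assumption and relies on a configured `baseUrl`. Note that `cy.server()` and `cy.route()` were deprecated in Cypress 6.0 and removed in Cypress 12, where `cy.intercept()` takes their place.

```javascript
// Stub definition passed to cy.route().
const loginStub = {
  method: 'POST',
  url: '/login',
  response: { success: false },
};

// `Cypress`, `it`, and `cy` are globals provided by the Cypress runner.
if (typeof Cypress !== 'undefined') {
  it('shows the app a failed login without involving the real backend', () => {
    cy.server();                      // enable routing (Cypress versions before 12)
    cy.route(loginStub).as('login');  // register the stub under an alias
    cy.visit('/login');               // assumed page path; relies on a configured baseUrl
    cy.get('#submitButton').click();  // triggers the POST from the browser
    cy.wait('@login');                // resolves once the stubbed request has happened
  });
}
```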

What the above code says is that we want to use Cypress as a server, and that we want to wait and listen for a POST request going to the /login URL after a submit button (with an id of submitButton) is clicked. When that request happens, we respond with a { success: false } result. This means the actual response from our application for that URL is replaced by a fake response that we designed ourselves. This is what stubbing a network request looks like.

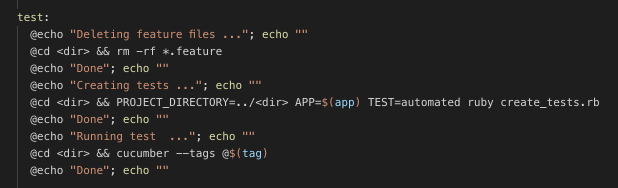

Now why would we want to do this? Some reasons:

- We want to check how an application behaves for scenarios where a request fails to reach the application server. Do we redirect the user? Or do we show an error popup? Or does the application also work offline? To do this without stubbing, we would need some help from a programmer to shut down the app server at the right time after we perform the scenario.

- We want to speed up tests by stubbing the response of some requests with less data than the actual responses deliver. We can even have the response data be empty, if we don’t necessarily need that specific data for a test.

- We want to see what happens to the application when it receives an incorrect response value from a request.
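The three scenarios above could be sketched as three stub definitions for the same request. The `/api/orders` endpoint, the `/orders` page, and the response shapes are assumptions for illustration. The 503 status only approximates an unreachable server; it does not cut the network.

```javascript
// Hypothetical stubs for one endpoint, one per scenario in the list above.
const orderStubs = {
  // 1. The server cannot handle the request.
  serverDown: { method: 'GET', url: '/api/orders', status: 503, response: {} },
  // 2. A much smaller (here: empty) payload to speed the test up.
  emptyData: { method: 'GET', url: '/api/orders', response: [] },
  // 3. A response whose value is not what the app expects.
  wrongShape: { method: 'GET', url: '/api/orders', response: { orders: 'not-a-list' } },
};

// `Cypress`, `it`, and `cy` are globals provided by the Cypress runner.
if (typeof Cypress !== 'undefined') {
  Object.keys(orderStubs).forEach((name) => {
    it(`renders the orders page with the ${name} stub`, () => {
      cy.server();
      cy.route(orderStubs[name]).as('orders');
      cy.visit('/orders'); // assumed page that requests /api/orders on load
      cy.wait('@orders');
      // Assertions about the resulting UI (error popup, empty state, ...) go here.
    });
  });
}
```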

This is one thing I am loving about Cypress. Out of the box, it lets me play with network requests alongside testing the user interface, and it lets me tinker with them some more.